Intel Low Power Sub System Dma Controller Driver For Mac

Direct memory access (DMA) is a feature of computer systems that allows certain hardware subsystems to access main system memory independently of the central processing unit (CPU).

Without DMA, when the CPU is using programmed input/output, it is typically fully occupied for the entire duration of the read or write operation, and is thus unavailable to perform other work. With DMA, the CPU first initiates the transfer, then does other operations while the transfer is in progress, and finally receives an interrupt from the DMA controller (DMAC) when the operation is done. This feature is useful whenever the CPU cannot keep up with the rate of data transfer, or when the CPU needs to perform work while waiting for a relatively slow I/O data transfer.

Many hardware systems use DMA, including disk drive controllers, graphics cards, network cards and sound cards. DMA is also used for intra-chip data transfer in multi-core processors. Computers that have DMA channels can transfer data to and from devices with much less CPU overhead than computers without DMA channels. Similarly, a processing element inside a multi-core processor can transfer data to and from its local memory without occupying its processor time, allowing computation and data transfer to proceed in parallel.

DMA can also be used for 'memory to memory' copying or moving of data within memory. DMA can offload expensive memory operations, such as large copies or scatter-gather operations, from the CPU to a dedicated DMA engine.


An implementation example is the I/O Acceleration Technology. DMA is of interest in network-on-chip and in-memory computing architectures.

Principles

Third-party

Standard DMA, also called third-party DMA, uses a DMA controller. A DMA controller can generate memory addresses and initiate memory read or write cycles. It contains several hardware registers that can be written and read by the CPU.

These include a memory address register, a byte count register, and one or more control registers. Depending on what features the DMA controller provides, these control registers might specify some combination of the source, the destination, the direction of the transfer (reading from the I/O device or writing to the I/O device), the size of the transfer unit, and/or the number of bytes to transfer in one burst.

To carry out an input, output or memory-to-memory operation, the host processor initializes the DMA controller with a count of the number of words to transfer and the memory address to use. The CPU then commands the peripheral device to initiate the data transfer. The DMA controller then provides addresses and read/write control lines to the system memory. Each time a byte of data is ready to be transferred between the peripheral device and memory, the DMA controller increments its internal address register, until the full block of data is transferred.

Bus mastering

In a bus mastering system, also known as a first-party DMA system, the CPU and peripherals can each be granted control of the memory bus. Where a peripheral can become bus master, it can write directly to system memory without involvement of the CPU, providing memory address and control signals as required.
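The register-programming sequence described for third-party DMA can be illustrated with a small software model. This is only a sketch under stated assumptions: `ToyDmaController`, its field names and the single direction flag are hypothetical and do not correspond to any real controller's register layout.

```python
from dataclasses import dataclass


@dataclass
class ToyDmaController:
    """Hypothetical register-level model of a third-party DMA controller."""
    address: int = 0         # memory address register
    count: int = 0           # byte count register
    to_memory: bool = True   # control register bit: direction of the transfer

    def program(self, address: int, count: int, to_memory: bool) -> None:
        """What the CPU does up front: write the controller's registers."""
        self.address = address
        self.count = count
        self.to_memory = to_memory

    def run(self, memory: bytearray, device: list) -> None:
        """Move one byte per step, advancing the address register each time."""
        while self.count > 0:
            if self.to_memory:
                memory[self.address] = device.pop(0)   # device -> memory
            else:
                device.append(memory[self.address])    # memory -> device
            self.address += 1
            self.count -= 1


memory = bytearray(16)
device_fifo = [0xDE, 0xAD, 0xBE, 0xEF]

dmac = ToyDmaController()
dmac.program(address=4, count=4, to_memory=True)  # CPU sets up the transfer
dmac.run(memory, device_fifo)                     # controller does the copying
print(memory[4:8].hex())
```

In this model the CPU's only involvement is the call to `program`; on real hardware the loop in `run` would proceed in the controller while the CPU executes other code, with an interrupt signalling completion.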

Some measure must be provided to put the processor into a hold condition so that bus contention does not occur.

Modes of operation

Burst mode

In burst mode, an entire block of data is transferred in one contiguous sequence. Once the DMA controller is granted access to the system bus by the CPU, it transfers all bytes of data in the data block before releasing control of the system buses back to the CPU. This renders the CPU inactive for relatively long periods of time. The mode is also called 'Block Transfer Mode'.

Cycle stealing mode

The cycle stealing mode is used in systems in which the CPU should not be disabled for the length of time needed for burst transfer modes.

In the cycle stealing mode, the DMA controller obtains access to the system bus the same way as in burst mode, using the BR (Bus Request) and BG (Bus Grant) signals, which are the two signals controlling the interface between the CPU and the DMA controller. However, in cycle stealing mode, after one byte of data transfer, control of the system bus is returned to the CPU by deasserting BG. It is then continually requested again via BR, transferring one byte of data per request, until the entire block of data has been transferred. By continually obtaining and releasing control of the system bus, the DMA controller essentially interleaves instruction and data transfers. The CPU processes an instruction, then the DMA controller transfers one data value, and so on. The data block is not transferred as quickly in cycle stealing mode as in burst mode, but the CPU is not idled for as long as in burst mode. Cycle stealing mode is useful for controllers that monitor data in real time.

Transparent mode

Transparent mode takes the most time to transfer a block of data, yet it is also the most efficient mode in terms of overall system performance.
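The trade-off between burst mode and cycle stealing mode described above can be made concrete with a toy bus timeline. The sketch below is an illustration only: it assumes every CPU instruction and every DMA byte occupies exactly one bus slot, and the function names are hypothetical.

```python
def burst(block_size: int, cpu_instructions: int) -> list:
    """DMA holds the bus for the whole block, then the CPU runs."""
    return ["D"] * block_size + ["C"] * cpu_instructions


def cycle_stealing(block_size: int, cpu_instructions: int) -> list:
    """CPU runs one instruction, DMA moves one byte, and so on."""
    timeline = []
    dma_left, cpu_left = block_size, cpu_instructions
    while dma_left or cpu_left:
        if cpu_left:
            timeline.append("C")
            cpu_left -= 1
        if dma_left:
            timeline.append("D")
            dma_left -= 1
    return timeline


def longest_cpu_stall(timeline: list) -> int:
    """Longest run of consecutive slots owned by the DMA controller."""
    longest = current = 0
    for slot in timeline:
        current = current + 1 if slot == "D" else 0
        longest = max(longest, current)
    return longest


def dma_finish_slot(timeline: list) -> int:
    """Number of slots elapsed when the last DMA byte has been moved."""
    return len(timeline) - timeline[::-1].index("D")


for name, mode in (("burst", burst), ("cycle stealing", cycle_stealing)):
    t = mode(block_size=4, cpu_instructions=4)
    print(name, "".join(t), longest_cpu_stall(t), dma_finish_slot(t))
```

With four bytes and four instructions, the burst timeline finishes the DMA block after four slots but stalls the CPU for four slots in a row, while the cycle stealing timeline never stalls the CPU for more than one slot but needs eight slots to finish the block.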

In transparent mode, the DMA controller transfers data only when the CPU is performing operations that do not use the system buses. The primary advantage of transparent mode is that the CPU never stops executing its programs and the DMA transfer is free in terms of time, while the disadvantage is that the hardware needs to determine when the CPU is not using the system buses, which can be complex. This is also called 'Hidden DMA data transfer mode'.

Cache coherency

DMA can lead to cache coherency problems. Imagine a CPU equipped with a cache and an external memory that can be accessed directly by devices using DMA. When the CPU accesses location X in the memory, the current value will be stored in the cache.

Subsequent operations on X will update the cached copy of X, but not the external memory version of X, assuming a write-back cache. If the cache is not flushed to the memory before the next time a device tries to access X, the device will receive a stale value of X.

Similarly, if the cached copy of X is not invalidated when a device writes a new value to the memory, then the CPU will operate on a stale value of X.

This issue can be addressed in one of two ways in system design. Cache-coherent systems implement a method in hardware whereby external writes are signaled to the cache controller, which then performs a cache invalidation for DMA writes or a cache flush for DMA reads.

Non-coherent systems leave this to software, where the OS must then ensure that the cache lines are flushed before an outgoing DMA transfer is started and invalidated before a memory range affected by an incoming DMA transfer is accessed. The OS must make sure that the memory range is not accessed by any running threads in the meantime. The latter approach introduces some overhead to the DMA operation, as most hardware requires a loop to invalidate each cache line individually.

Hybrids also exist, where the secondary L2 cache is coherent while the L1 cache (typically on-CPU) is managed by software.

Examples

ISA

In the original IBM PC (and the follow-up PC/XT), there was only one Intel 8237 DMA controller capable of providing four DMA channels (numbered 0–3). These DMA channels performed 8-bit transfers (as the 8237 was an 8-bit device, ideally matched to the PC's CPU/bus architecture), could only address the first (i8086/8088-standard) megabyte of RAM, and were limited to addressing single 64 kB segments within that space (although the source and destination channels could address different segments). Additionally, the controller could only be used for transfers to, from or between expansion bus I/O devices, as the 8237 could only perform memory-to-memory transfers using channels 0 & 1, of which channel 0 in the PC (& XT) was dedicated to dynamic memory refresh. This prevented it from being used as a general-purpose 'Blitter', and consequently block memory moves in the PC, limited by the general PIO speed of the CPU, were very slow.

With the IBM PC/AT, the enhanced AT bus (more familiarly retronymed as the ISA, or 'Industry Standard Architecture') added a second 8237 DMA controller to provide three additional channels (5–7; channel 4 is used as a cascade to the first 8237). These were much needed, as highlighted by resource clashes arising from the XT's additional expandability over the original PC. The page register was also rewired to address the full 16 MB memory address space of the 80286 CPU.
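The 64 kB segment restriction and the role of the page register can be sketched numerically. The example below assumes the common arrangement for the 8-bit channels, where the page register supplies the address bits above the 8237's 16-bit address register. The helper names are hypothetical, and the word-based addressing of the 16-bit channels is deliberately not modelled.

```python
def split_address(physical: int) -> tuple:
    """Split a physical address into (page register value, 16-bit offset)."""
    return physical >> 16, physical & 0xFFFF


def crosses_boundary(physical: int, length: int) -> bool:
    """True if the buffer spans more than one 64 kB page.

    The 16-bit address register wraps at a page boundary, so such a
    buffer cannot be covered by a single transfer in this model.
    """
    return length > 0 and (physical >> 16) != ((physical + length - 1) >> 16)


def is_reachable(physical: int, length: int, address_bits: int) -> bool:
    """True if the whole buffer lies below 2**address_bits."""
    return physical + length <= (1 << address_bits)


page, offset = split_address(0x2F000)
print(hex(page), hex(offset))
print(crosses_boundary(0x2F000, 0x1000))   # ends exactly at the page edge
print(crosses_boundary(0x2F000, 0x1001))   # one byte too long
print(is_reachable(0x100000, 1, 20))       # above the first megabyte
print(is_reachable(0x100000, 1, 24))       # within a 16 MB address space
```

A driver written against these constraints would typically allocate its DMA buffer so that `crosses_boundary` is false, or split the transfer at the page boundary and reprogram the controller for the second part.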

This second controller was also integrated in a way capable of performing 16-bit transfers when an I/O device is used as the data source and/or destination (as it actually only processes data itself for memory-to-memory transfers, otherwise simply controlling the data flow between other parts of the 16-bit system, making its own data bus width relatively immaterial), doubling data throughput when the upper three channels are used. For compatibility, the lower four DMA channels were still limited to 8-bit transfers only, and whilst memory-to-memory transfers were now technically possible due to the freeing up of channel 0 from having to handle DRAM refresh, from a practical standpoint they were of limited value because of the controller's consequent low throughput compared to what the CPU could now achieve (i.e., a 16-bit, more optimised 80286 running at a minimum of 6 MHz, vs an 8-bit controller locked at 4.77 MHz).

DMA channel assignments:

- Channel 0: DRAM refresh (obsolete)
- Channel 1: User hardware, usually sound card 8-bit DMA
- Channel 2: Floppy disk controller
- Channel 3: Hard disk (obsoleted by PIO modes, and replaced by UDMA modes), parallel port (ECP capable port), certain SoundBlaster clones like the OPTi 928
- Channel 4: Cascade to PC/XT DMA controller
- Channel 5: Hard disk (PS/2 only), user hardware for all others, usually sound card 16-bit DMA
- Channel 6: User hardware
- Channel 7: User hardware

PCI

A PCI architecture has no central DMA controller, unlike ISA. Instead, any PCI component can request control of the bus ('become the bus master') and request to read from and write to system memory. More precisely, a PCI component requests bus ownership from the PCI bus controller (usually the southbridge in a modern PC design), which will arbitrate if several devices request bus ownership simultaneously, since there can only be one bus master at one time. When the component is granted ownership, it will issue normal read and write commands on the PCI bus, which will be claimed by the bus controller and forwarded to the memory controller using a scheme which is specific to every chipset.

As an example, on an AMD Socket AM2-based PC, the southbridge will forward the transactions to the memory controller (which is integrated on the CPU die) using HyperTransport, which will in turn convert them to DDR2 operations and send them out on the DDR2 memory bus. As a result, there are quite a number of steps involved in a PCI DMA transfer; however, that poses little problem, since the PCI device or PCI bus itself is an order of magnitude slower than the rest of the components (see list of device bandwidths).

A modern x86 CPU may use more than 4 GB of memory, utilizing Physical Address Extension (PAE), a 36-bit addressing mode, or the native 64-bit mode of x86-64 CPUs. In such a case, a device using DMA with a 32-bit address bus is unable to address memory above the 4 GB line. The Double Address Cycle (DAC) mechanism, if implemented on both the PCI bus and the device itself, enables 64-bit DMA addressing.

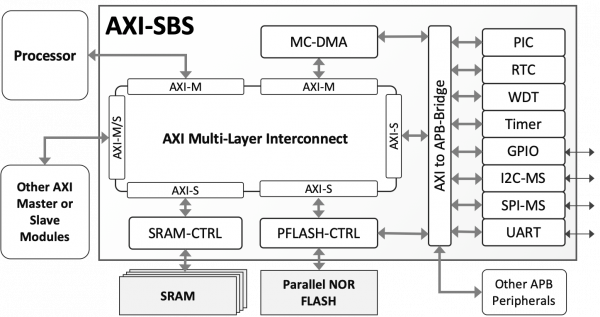

Otherwise, the operating system would need to work around the problem by either using costly double buffers (DOS/Windows nomenclature), also known as bounce buffers (FreeBSD/Linux), or it could use an IOMMU to provide address translation services if one is present.

I/OAT

As an example of a DMA engine incorporated in a general-purpose CPU, newer Intel chipsets include a DMA engine called I/O Acceleration Technology (I/OAT), which can offload memory copying from the main CPU, freeing it to do other work. In 2006, Intel developer Andrew Grover performed benchmarks using I/OAT to offload network traffic copies and found no more than 10% improvement in CPU utilization with receiving workloads.

DDIO

Further performance-oriented enhancements to the DMA mechanism have been introduced in Intel processors with their Data Direct I/O (DDIO) feature, allowing the DMA 'windows' to reside within CPU caches instead of system RAM. As a result, CPU caches are used as the primary source and destination for I/O, allowing network interface controllers (NICs) to DMA directly to the last-level cache of local CPUs and avoid costly fetching of the I/O data from system RAM. DDIO therefore reduces the overall I/O processing latency, allows processing of the I/O to be performed entirely in-cache, prevents the available RAM bandwidth/latency from becoming a performance bottleneck, and may lower power consumption by allowing RAM to remain longer in a low-powered state.

AHB

In systems-on-a-chip and embedded systems, typical system bus infrastructure is a complex on-chip bus such as the AMBA High-performance Bus (AHB). AMBA defines two kinds of AHB components: master and slave.

A slave interface is similar to programmed I/O, through which the software (running on an embedded CPU, e.g. ARM) can write/read I/O registers or (less commonly) local memory blocks inside the device. A master interface can be used by the device to perform DMA transactions to/from system memory without heavily loading the CPU.

Therefore, high-bandwidth devices such as network controllers that need to transfer huge amounts of data to/from system memory will have two interface adapters to the AHB: a master and a slave interface. This is because on-chip buses like AHB do not support tri-stating the bus or alternating the direction of any line on the bus. Like PCI, no central DMA controller is required since the DMA is bus-mastering, but an arbiter is required if multiple masters are present on the system.

Internally, a multichannel DMA engine is usually present in the device to perform multiple concurrent operations as programmed by the software.

Cell

As an example usage of DMA in a multiprocessor-system-on-chip, IBM/Sony/Toshiba's Cell processor incorporates a DMA engine for each of its 9 processing elements, including one Power processor element (PPE) and eight synergistic processor elements (SPEs). Since the SPE's load/store instructions can read/write only its own local memory, an SPE depends entirely on DMAs to transfer data to and from the main memory and local memories of other SPEs. Thus the DMA acts as a primary means of data transfer among cores inside this CPU (in contrast to cache-coherent CMP architectures such as Intel's cancelled general-purpose GPU, Larrabee).

DMA in Cell is fully cache coherent (note, however, that local stores of SPEs operated upon by DMA do not act as globally coherent cache in the standard sense). In both read ('get') and write ('put'), a DMA command can transfer either a single block area of size up to 16 KB, or a list of 2 to 2048 such blocks. The DMA command is issued by specifying a pair of a local address and a remote address: for example, when an SPE program issues a put DMA command, it specifies an address of its own local memory as the source and a virtual memory address (pointing to either the main memory or the local memory of another SPE) as the target, together with a block size.
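The size limits quoted above (blocks of up to 16 KB, lists of 2 to 2048 blocks) imply that a larger transfer has to be planned as a sequence of blocks. The following sketch shows one way such a plan could be computed. `plan_transfer` and its tuple layout are hypothetical, and real Cell DMA commands have further constraints (such as alignment) that are not modelled here.

```python
MAX_BLOCK = 16 * 1024   # largest single DMA block, as quoted above
MAX_LIST = 2048         # largest number of blocks in one DMA list


def plan_transfer(local_addr: int, remote_addr: int, size: int) -> list:
    """Split a transfer into (local, remote, length) blocks of <= 16 KB."""
    if size <= 0:
        raise ValueError("size must be positive")
    blocks = []
    offset = 0
    while offset < size:
        length = min(MAX_BLOCK, size - offset)
        blocks.append((local_addr + offset, remote_addr + offset, length))
        offset += length
    if len(blocks) > MAX_LIST:
        raise ValueError("transfer does not fit in a single DMA list")
    return blocks


plan = plan_transfer(local_addr=0x0, remote_addr=0x10000000, size=40 * 1024)
for local, remote, length in plan:
    print(hex(local), hex(remote), length)
```

A plan with one entry corresponds to a single-block command, while a plan with two or more entries corresponds to a DMA list.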

According to an experiment, an effective peak performance of DMA in Cell (3 GHz, under uniform traffic) reaches 200 GB per second.

Pipelining

Processors with scratchpad memory and DMA (such as digital signal processors and the Cell processor) may benefit from software overlapping DMA memory operations with processing, via double buffering or multibuffering. For example, the on-chip memory is split into two buffers; the processor may be operating on data in one, while the DMA engine is loading and storing data in the other.

This allows the system to avoid memory latency and exploit burst transfers, at the expense of needing a predictable memory access pattern.
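The double-buffering pattern described above can be sketched as follows. The code is a sequential simulation only: `dma_load` is a hypothetical stand-in for an asynchronous DMA transfer, and summing each chunk stands in for the real computation. On actual hardware the load of the next chunk would run concurrently with the processing of the current one.

```python
def dma_load(source: list, chunk_index: int, chunk_size: int) -> list:
    """Stand-in for a DMA transfer of one chunk into on-chip memory."""
    start = chunk_index * chunk_size
    return source[start:start + chunk_size]


def process_double_buffered(source: list, chunk_size: int) -> list:
    """Process `source` chunk by chunk using two alternating buffers."""
    n_chunks = (len(source) + chunk_size - 1) // chunk_size
    buffers = [None, None]
    buffers[0] = dma_load(source, 0, chunk_size)   # prime the first buffer
    results = []
    for i in range(n_chunks):
        active = i % 2
        if i + 1 < n_chunks:
            # Start filling the idle buffer with the next chunk; on real
            # hardware this would overlap with the computation below.
            buffers[1 - active] = dma_load(source, i + 1, chunk_size)
        results.append(sum(buffers[active]))       # compute on the active buffer
    return results


print(process_double_buffered(list(range(10)), chunk_size=4))
```

The predictable access pattern mentioned above is what makes this work: the code must know which chunk it will need next before it has finished with the current one.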
